Deploying AWX in Kubernetes with AWX Operator

A while back I deployed AWX to run Ansible playbooks (this is covered in Setting Up and Using AWX with docker-compose). I wanted to refresh that configuration, since the deployment model has switched to the AWX Operator on Kubernetes.

Deploy PostgreSQL in K8S

I recently deployed OpenEBS to allow creation of Persistent Volumes with decent performance (this setup is covered in Using cStor from OpenEBS). Using that as my storage backend, I created a PostgreSQL Deployment in Kubernetes with persistent storage:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: postgres
spec:
  replicas: 1
  selector:
    matchLabels:
      app: postgres
  template:
    metadata:
      labels:
        app: postgres
    spec:
      containers:
        - name: postgres
          image: postgres:11
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 5432
              name: postgres
              protocol: TCP
          env:
            - name: POSTGRES_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: postgres
                  key: password
            - name: PGDATA
              value: /var/lib/postgresql/data/pgdata
          volumeMounts:
            - mountPath: /var/lib/postgresql/data
              name: postgres-data-vol
      volumes:
        - name: postgres-data-vol
          persistentVolumeClaim:
            claimName: posgres-data-pvc
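The Deployment above depends on objects that aren't shown: a Secret named postgres with a password key and a PersistentVolumeClaim named posgres-data-pvc; the output further down also lists a postgres Service. As a minimal sketch, the following Python builds all three as a JSON List, which kubectl apply -f also accepts. The storage class name cstor-csi-disk, the 10Gi size, and the example password are assumptions (hypothetical values), so substitute your own cStor storage class and credentials.

```python
import base64
import json

# Hypothetical values: replace with your own cStor storage class and size.
STORAGE_CLASS = "cstor-csi-disk"
PVC_SIZE = "10Gi"
LABELS = {"app": "postgres"}


def postgres_support_manifests(password: str) -> list:
    """Return the Secret, PVC and Service that the postgres Deployment relies on."""
    secret = {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": "postgres"},
        "type": "Opaque",
        # Values under "data" must be base64-encoded.
        "data": {"password": base64.b64encode(password.encode()).decode()},
    }
    pvc = {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        # Must match claimName in the Deployment exactly.
        "metadata": {"name": "posgres-data-pvc"},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": STORAGE_CLASS,
            "resources": {"requests": {"storage": PVC_SIZE}},
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        # The label lets `k get all -l app=postgres` list the Service too.
        "metadata": {"name": "postgres", "labels": LABELS},
        "spec": {
            "type": "ClusterIP",
            # Selects the pods created from the Deployment template (app: postgres).
            "selector": LABELS,
            "ports": [{"name": "postgres", "port": 5432, "targetPort": 5432, "protocol": "TCP"}],
        },
    }
    return [secret, pvc, service]


if __name__ == "__main__":
    items = postgres_support_manifests("changeme")  # example password only
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

For example: python3 postgres_manifests.py | kubectl apply -f -. Putting the app: postgres label on the Service is what makes it show up in the label query below.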

There are actually quite a few nice examples of PostgreSQL deployments in Kubernetes:

- How to use Kubernetes to deploy Postgres

- Deploying PostgreSQL as a StatefulSet in Kubernetes

- How to Deploy PostgreSQL on Kubernetes

Mine was deployed and ready to go (k here is an alias for kubectl):

> k get all -l app=postgres
NAME                            READY   STATUS    RESTARTS   AGE
pod/postgres-868f4bdc9b-9d8r4   1/1     Running   0          4h50m

NAME               TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
service/postgres   ClusterIP   10.104.155.189   <none>        5432/TCP   3d4h

NAME                                  DESIRED   CURRENT   READY   AGE
replicaset.apps/postgres-868f4bdc9b   1         1         1       3d4h

Install the AWX Operator

Most of the setup is in the readme. Here are the quick steps:

$ git clone https://github.com/ansible/awx-operator.git
$ cd awx-operator
$ export NAMESPACE=awx
$ make deploy

Make sure you have make and kustomize installed on the machine. After that runs, you should see the controller deployed:

$ kubectl get pods -n $NAMESPACE
NAME                                               READY   STATUS    RESTARTS   AGE
awx-operator-controller-manager-66ccd8f997-rhd4z   2/2     Running   0          11s
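To check readiness without reading the READY column by eye, you can work from kubectl's JSON output instead. Here is a minimal sketch, assuming the pod list was saved with kubectl get pods -n $NAMESPACE -o json > pods.json (the file name is only an example). For each pod it counts the containers whose status.containerStatuses[].ready is true, which is what the READY column summarizes.

```python
import json
import sys


def pod_readiness(pod_list: dict) -> dict:
    """Map pod name -> (ready containers, total containers) from `kubectl get pods -o json`."""
    result = {}
    for pod in pod_list.get("items", []):
        statuses = pod.get("status", {}).get("containerStatuses", [])
        ready = sum(1 for s in statuses if s.get("ready"))
        result[pod["metadata"]["name"]] = (ready, len(statuses))
    return result


def all_ready(pod_list: dict) -> bool:
    """True when there is at least one pod and every pod has all containers ready (e.g. 2/2)."""
    counts = pod_readiness(pod_list)
    return bool(counts) and all(total > 0 and ready == total for ready, total in counts.values())


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python3 check_ready.py pods.json
    with open(sys.argv[1]) as fh:
        data = json.load(fh)
    for name, (ready, total) in pod_readiness(data).items():
        print(f"{name}\t{ready}/{total}")
    sys.exit(0 if all_ready(data) else 1)
```

The script exits non-zero while any pod is not fully ready. If you only need to block until everything is up, kubectl wait --for=condition=Ready pods --all -n $NAMESPACE does the same job without any scripting.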

Deploy AWX

I ended up creating the following configuration:

apiVersion: awx.ansible.com/v1beta1
kind: AWX
metadata:
  name: awx
spec:
  service_type: ClusterIP
  postgres_configuration_secret: awx-postgres-configuration
  extra_volumes: |
    - name: ansible-cfg
      configMap:
        defaultMode: 420
        items:
          - key: ansible.cfg
            path: ansible.cfg
        name: awx-extra-config
  ee_extra_volume_mounts: |
    - name: ansible-cfg
      mountPath: /etc/ansible/ansible.cfg
      subPath: ansible.cfg
  # control_plane_ee_image: elatov/awx-custom-ee:0.0.2
  ee_images:
    - name: custom-awx-ee
      image: elatov/awx-custom-ee:0.0.4
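The spec refers to two objects that must already exist in the awx namespace: the awx-postgres-configuration Secret and the awx-extra-config ConfigMap that provides ansible.cfg. Below is a minimal sketch that builds both as JSON. The Secret keys (host, port, database, username, password, sslmode, type: unmanaged) follow the External PostgreSQL Service sample in the AWX Operator README. Everything else is assumed: the host assumes the postgres Service runs in the default namespace, the credentials match the SQL used further down, and the ansible.cfg contents are only a placeholder.

```python
import json

# Assumptions: the postgres Service lives in "default", AWX in "awx".
NAMESPACE = "awx"
PG_HOST = "postgres.default.svc.cluster.local"

# Placeholder ansible.cfg contents (hypothetical); replace with your own settings.
ANSIBLE_CFG = """[defaults]
host_key_checking = False
"""


def awx_support_objects(db_password: str) -> list:
    """Build the Secret and ConfigMap that the AWX spec above refers to."""
    pg_secret = {
        "apiVersion": "v1",
        "kind": "Secret",
        # Name must match spec.postgres_configuration_secret.
        "metadata": {"name": "awx-postgres-configuration", "namespace": NAMESPACE},
        "type": "Opaque",
        # stringData lets Kubernetes do the base64 encoding.
        "stringData": {
            "host": PG_HOST,
            "port": "5432",
            "database": "awx",
            "username": "awx",
            "password": db_password,
            "sslmode": "prefer",
            # Marks the database as external (not managed by the operator).
            "type": "unmanaged",
        },
    }
    extra_config = {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        # extra_volumes maps the "ansible.cfg" key of this ConfigMap to a file.
        "metadata": {"name": "awx-extra-config", "namespace": NAMESPACE},
        "data": {"ansible.cfg": ANSIBLE_CFG},
    }
    return [pg_secret, extra_config]


if __name__ == "__main__":
    # Same password as the CREATE USER statement used for the awx role.
    items = awx_support_objects("awxpass")
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

Pipe the output into kubectl apply -f - before creating the AWX resource.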

You might not need the ee_images option; I will cover it later. You can check out the samples for the PostgreSQL configuration secret at External PostgreSQL Service and for the extra volumes at Custom Volume and Volume Mount Options. When I first tried to create my AWX deployment it failed, because I hadn't created a database for AWX in PostgreSQL; this is covered in Need some assistance with AWX external postgresql db. Here are the commands to create the database and credentials:

> k exec -it $(k get pods -l app=postgres -o name) -- /bin/bash
bash-5.1# psql -h localhost -U postgres
psql (14.1)
Type "help" for help.

postgres=# CREATE USER awx WITH ENCRYPTED PASSWORD 'awxpass';
postgres=# CREATE DATABASE awx OWNER awx;
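The user, database, and password here must match the awx-postgres-configuration Secret. If you generate those values instead of typing them, a small sketch like the following (the helper names are hypothetical) produces the same two statements. It double-quotes the identifiers and single-quotes the password literal, doubling any embedded quotes as PostgreSQL's quoting rules require.

```python
def quote_ident(name: str) -> str:
    """Quote a PostgreSQL identifier, doubling embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def quote_literal(value: str) -> str:
    """Quote a string literal, doubling embedded single quotes.

    Assumes standard_conforming_strings = on (the PostgreSQL default),
    so backslashes need no escaping.
    """
    return "'" + value.replace("'", "''") + "'"


def awx_db_sql(user: str, database: str, password: str) -> list:
    """Return the statements that create the AWX role and its database."""
    return [
        f"CREATE USER {quote_ident(user)} WITH ENCRYPTED PASSWORD {quote_literal(password)};",
        f"CREATE DATABASE {quote_ident(database)} OWNER {quote_ident(user)};",
    ]


if __name__ == "__main__":
    # Same values as the interactive session above.
    for stmt in awx_db_sql("awx", "awx", "awxpass"):
        print(stmt)
```

The output can be piped straight into the pod, for example python3 awx_sql.py | kubectl exec -i deploy/postgres -- psql -h localhost -U postgres. Quoting "awx" is equivalent to the unquoted lowercase name used above.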

After that the deployment finished and I had an AWX instance deployed:

> k get pods -n awx
NAME                                               READY   STATUS    RESTARTS   AGE
awx-9b6df9459-7p79d                                4/4     Running   0          2d5h
awx-operator-controller-manager-7f56f8b69c-fqqds   2/2     Running   0          2d5h

Create a user manually for AWX Web UI

There is a known issue where the default credentials don't work, even if you follow the instructions to get the password:

$ k -n awx get secret awx-admin-password -o jsonpath="{.data.password}" | base64 -d
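For reference, the jsonpath/base64 pipeline above just pulls one key out of the Secret and base64-decodes it. Here is the same logic as a small Python sketch. It assumes the Secret was saved with kubectl -n awx get secret awx-admin-password -o json > secret.json, and that file name is only an example.

```python
import base64
import json
import sys


def secret_value(secret: dict, key: str = "password") -> str:
    """Decode one key from a Secret as returned by `kubectl get secret -o json`."""
    data = secret.get("data", {})
    if key not in data:
        name = secret.get("metadata", {}).get("name", "?")
        raise KeyError(f"key {key!r} not found in secret {name!r}")
    # Secret data values are base64-encoded, like the `base64 -d` step above.
    return base64.b64decode(data[key]).decode("utf-8")


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python3 secret_value.py secret.json
    with open(sys.argv[1]) as fh:
        print(secret_value(json.load(fh)))
```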

So I just created one manually:

> k exec -n awx -it $(k get pods -n awx -l app.kubernetes.io/component=awx -o name) -c awx-web -- /bin/bash
bash-4.4$ awx-manage createsuperuser
Username: test
Email address:
Password:
Password (again):
Superuser created successfully.

Then I followed instructions similar to those in my previous post to configure the project, templates, and credentials. Other sites also cover the process pretty well:

- Install Ansible AWX on CentOS 8 / Rocky Linux 8

- Getting started Ansible AWX tower for IT automation run first playbook

Adding Custom Roles and Collections

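There have been changes in how custom collections and roles are included in Ansible playbooks in AWX: during a project sync, AWX installs whatever is listed in collections/requirements.yml and roles/requirements.yml in the project repository.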

So I just ended up creating the following in my git repo:

> cat collections/requirements.yml
collections:
  - name: community.crypto
  - name: community.general
  - name: community.hashi_vault
  - name: ansible.posix

> cat roles/requirements.yml
roles:
  - name: robertdebock.epel

Then after doing a project sync, my playbooks were able to use all the necessary collections.
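During the sync, each collection is placed under an ansible_collections/<namespace>/<name> tree. The job log later in this post shows ansible.posix loading from /runner/requirements_collections/ansible_collections/ansible/posix. The helper below maps a collection name to that layout, which makes it easier to reason about where things land. It is a sketch for illustration, not part of AWX.

```python
from pathlib import PurePosixPath


def collection_dir(root: str, fqcn: str) -> str:
    """Map 'namespace.name' (e.g. 'community.general') to root/ansible_collections/namespace/name."""
    namespace, sep, name = fqcn.partition(".")
    if not sep or not namespace or not name or "." in name:
        raise ValueError(f"expected '<namespace>.<name>', got {fqcn!r}")
    return str(PurePosixPath(root, "ansible_collections", namespace, name))


if __name__ == "__main__":
    for fqcn in ["community.crypto", "community.general", "community.hashi_vault", "ansible.posix"]:
        print(collection_dir("/runner/requirements_collections", fqcn))
```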

Creating a Custom AWX_EE Image

I was using HashiCorp Vault to populate some of the variables, and that kept failing since the hvac Python module is not included in the default EE image. I found an issue about this, and it looks like the way to handle it is to create a custom EE image that includes the module. I ran into a couple of sites that described the process:

- Building Kubernetes-enabled Tower/AWX Execution Environments Using AWX-EE and ansible-builder

- Execution Environments

- Creating a custom EE for AWX

First let’s get the prereqs:

> pip install --user ansible-builder
> git clone git@github.com:elatov/awx-ee.git
> cd awx-ee

I ended up with the following configs:

> cat execution-environment.yml
---
version: 1
dependencies:
  galaxy: _build/requirements.yml
  python: _build/requirements.txt
  system: _build/bindep.txt
build_arg_defaults:
  EE_BASE_IMAGE: 'quay.io/ansible/ansible-runner:stable-2.11-latest'
additional_build_steps:
  append:
    - RUN alternatives --set python /usr/bin/python3
    - COPY --from=quay.io/project-receptor/receptor:latest /usr/bin/receptor /usr/bin/receptor
    - RUN mkdir -p /var/run/receptor
    - ADD run.sh /run.sh
    - CMD /run.sh
    - USER 1000
    - RUN git lfs install

And here is the file where you can add your custom python modules:

> cat _build/requirements.txt
hvac

I customized the base image (EE_BASE_IMAGE) to make sure a development version doesn't end up in the image; this is discussed in an upstream issue. To build the image, run the following:

ansible-builder build --tag elatov/awx-custom-ee:0.0.4

For the tag, specify your own container registry and repository. You can then quickly test the image locally:

> docker run --user 0 --entrypoint /bin/bash -it --rm elatov/awx-custom-ee:0.0.4
bash-4.4# git clone git@github.com:elatov/ansible-tower-samples.git
bash-4.4# cd ansible-tower-samples
bash-4.4# ansible-playbook -vvv hello_world.yml
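The whole point of the custom image was the hvac module, so it is worth checking directly inside the container as part of that test. The sketch below is meant to be run with the image's python3. It takes module names on the command line; list whatever you added to _build/requirements.txt, and keep in mind that the import name can differ from the pip package name.

```python
import importlib.util
import sys


def missing_modules(names: list) -> list:
    """Return the top-level module names that this interpreter cannot find."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage inside the container: python3 check_modules.py hvac
    missing = missing_modules(sys.argv[1:])
    if missing:
        print("missing:", ", ".join(missing))
        sys.exit(1)
    print("all modules present")
```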

If everything works as expected, push the image to your registry:

> docker push elatov/awx-custom-ee:0.0.4
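The push goes to Docker Hub here because the tag has no registry host in its first component. For a private registry, the tag has to start with the registry host, e.g. registry.example.com/awx-custom-ee:0.0.4 (a hypothetical host). The sketch below is a simplified version of Docker's reference-resolution rule: the first component counts as a registry only if it contains a dot or a colon or is localhost. It is written for illustration and does not handle digests.

```python
def parse_image_ref(ref: str) -> tuple:
    """Split an image reference into (registry, repository, tag). Digests are not handled."""
    name, tag = ref, "latest"
    # A ':' after the last '/' starts the tag; an earlier one belongs to host:port.
    colon = ref.rfind(":")
    if colon > ref.rfind("/"):
        name, tag = ref[:colon], ref[colon + 1:]
    first, sep, rest = name.partition("/")
    # The first component is a registry host only if it looks like one.
    if sep and ("." in first or ":" in first or first == "localhost"):
        return first, rest, tag
    # Otherwise Docker Hub is assumed; single-name images live under library/.
    return "docker.io", (name if sep else f"library/{name}"), tag


if __name__ == "__main__":
    for ref in ["elatov/awx-custom-ee:0.0.4",
                "quay.io/ansible/ansible-runner:stable-2.11-latest",
                "postgres:11"]:
        print(ref, "->", parse_image_ref(ref))
```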

At this point you can either modify the awx-operator config and add the following section:

---
spec:
  ...
  ee_images:
    - name: custom-awx-ee
      image: elatov/awx-custom-ee:0.0.4

Or you can just add the image manually in the UI as per the instructions in Use an execution environment in jobs.
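A third option is to patch the existing AWX resource in place rather than re-applying the whole file. The sketch below builds a JSON merge patch for that. It assumes the resource is named awx in the awx namespace, as in the spec earlier. One caveat of merge patches (RFC 7386) is that lists are replaced as a whole, so the patch must contain every ee_images entry you want to keep.

```python
import json


def ee_images_patch(images: dict) -> str:
    """Build a JSON merge patch that sets spec.ee_images on an AWX resource.

    `images` maps an execution environment name to its image reference.
    Merge patches replace lists entirely, so pass every image you want to keep.
    """
    entries = [{"name": name, "image": image} for name, image in images.items()]
    return json.dumps({"spec": {"ee_images": entries}}, separators=(",", ":"))


if __name__ == "__main__":
    print(ee_images_patch({"custom-awx-ee": "elatov/awx-custom-ee:0.0.4"}))
```

Usage: kubectl -n awx patch awx awx --type merge -p "$(python3 ee_patch.py)". The operator then reconciles the change.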

Adding Mitogen in the Custom AWX_EE Image

Since I was already building a custom image, I decided to include Mitogen in it as well; I had come across a write-up suggesting this was possible. So I modified the ansible-builder config to have the following:

> cat execution-environment.yml
---
version: 1
dependencies:
  galaxy: _build/requirements.yml
  python: _build/requirements.txt
  system: _build/bindep.txt
build_arg_defaults:
  EE_BASE_IMAGE: 'quay.io/ansible/ansible-runner:stable-2.11-latest'
additional_build_steps:
  append:
    - RUN alternatives --set python /usr/bin/python3
    - COPY --from=quay.io/project-receptor/receptor:latest /usr/bin/receptor /usr/bin/receptor
    - RUN mkdir -p /var/run/receptor
    - RUN mkdir -p /usr/local/mitogen
    - COPY mitogen-0.3.2 /usr/local/mitogen/
    - ENV ANSIBLE_STRATEGY_PLUGINS=/usr/local/mitogen/ansible_mitogen/plugins/strategy
    - ENV ANSIBLE_STRATEGY=mitogen_linear
    - ADD run.sh /run.sh
    - CMD /run.sh
    - USER 1000
    - RUN git lfs install
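With Mitogen wired in through environment variables, it is easy to end up with an ANSIBLE_STRATEGY that matches no file in ANSIBLE_STRATEGY_PLUGINS, for example if the copied directory layout is different from what you expect. The sketch below is a sanity check to run inside the built image. It assumes the plugin is a file named <strategy>.py in one of the colon-separated directories, which is how Mitogen ships mitogen_linear.py under ansible_mitogen/plugins/strategy. It does not know about the strategies built into Ansible itself.

```python
import os
import sys


def find_strategy_plugin(env) -> str:
    """Return the file providing ANSIBLE_STRATEGY from ANSIBLE_STRATEGY_PLUGINS, or ''."""
    strategy = env.get("ANSIBLE_STRATEGY", "")
    if not strategy:
        return ""
    # ANSIBLE_STRATEGY_PLUGINS is a colon-separated list of directories.
    for directory in env.get("ANSIBLE_STRATEGY_PLUGINS", "").split(os.pathsep):
        candidate = os.path.join(directory, f"{strategy}.py")
        if directory and os.path.isfile(candidate):
            return candidate
    return ""


if __name__ == "__main__" and sys.argv[1:] == ["--check"]:
    # Usage inside the image: python3 check_strategy.py --check
    path = find_strategy_plugin(os.environ)
    print(path or "strategy plugin not found")
    sys.exit(0 if path else 1)
```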

Initially I tried to specify the Mitogen settings in the ansible.cfg file, as described in Custom Volume and Volume Mount Options, but that broke project syncs. The image used for project syncing is different from the image used for playbook runs, and that image doesn't include Mitogen. You can change that image as well; you basically specify the following in your awx-operator config:

---
spec:
  ...
  control_plane_ee_image: elatov/awx-custom-ee:0.0.4

I didn't want to change that yet, since after an update I want project syncs to keep using the default image. Instead, I set the environment variables at the image level in my custom EE build (as you can see above), and that worked out great.

Random mitogen notes

I ran into a weird issue where, with Mitogen enabled, the playbook would fail to find the right collection. I then found a page that helped out. Basically, if I ran the playbook by itself it worked, but if I ran multiple playbooks it would fail. So I enabled debug mode on the working and non-working runs and checked out the differences:

> diff job_122.txt job_125.txt | head
1c1
< Identity added: /runner/artifacts/122/ssh_key_data (root@nb)
---
> Identity added: /runner/artifacts/125/ssh_key_data (root@nb)
93c93
< Loading collection ansible.posix from /runner/project/collections/ansible_collections/ansible/posix
---
> Loading collection ansible.posix from /runner/requirements_collections/ansible_collections/ansible/posix
104,357d103
< statically imported: /runner/project/roles/common/tasks/main-install.yaml
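With long debug logs, it can be quicker to extract only the "Loading collection ... from ..." lines and compare those. The sketch below does that for two saved job outputs, such as job_122.txt and job_125.txt above. Its regular expression is written against the log lines shown in this diff, so it may need adjusting for other Ansible versions.

```python
import re
import sys

# Matches lines like:
# Loading collection ansible.posix from /runner/project/collections/ansible_collections/ansible/posix
LOAD_RE = re.compile(r"Loading collection (\S+) from (\S+)")


def collection_sources(log_text: str) -> dict:
    """Map each collection name to the path it was loaded from (last occurrence wins)."""
    return {m.group(1): m.group(2) for m in LOAD_RE.finditer(log_text)}


def source_differences(log_a: str, log_b: str) -> dict:
    """Return {collection: (path_in_a, path_in_b)} for collections loaded from different places."""
    a, b = collection_sources(log_a), collection_sources(log_b)
    return {
        name: (a.get(name), b.get(name))
        for name in sorted(a.keys() | b.keys())
        if a.get(name) != b.get(name)
    }


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage: python3 compare_sources.py job_122.txt job_125.txt
    with open(sys.argv[1]) as fa, open(sys.argv[2]) as fb:
        for name, (pa, pb) in source_differences(fa.read(), fb.read()).items():
            print(f"{name}:\n  {sys.argv[1]}: {pa}\n  {sys.argv[2]}: {pb}")
```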

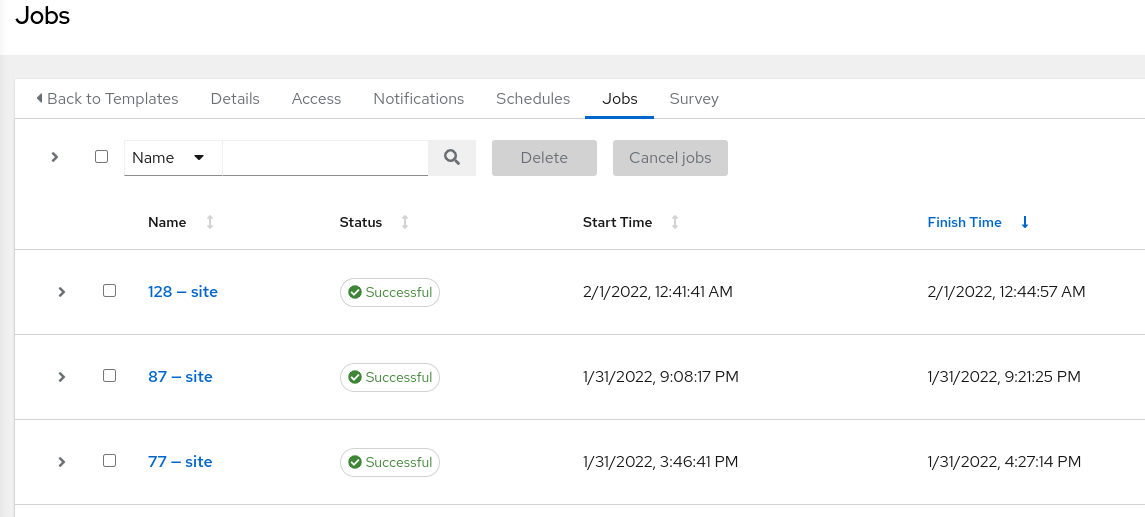

It turned out I had a custom collections/ansible_collections folder in my GitHub repo. Notice how the working run was getting the collection directly from the project (/runner/project/collections/), while the non-working one was getting it from the location where requirements.yml is installed (/runner/requirements_collections/). So I removed that folder from my git repo, and then it started working. I also wanted to share the execution times of the playbooks in AWX:

The very first run took about 40 minutes, then after caching all the facts it was down to 13 minutes, and lastly with Mitogen enabled it's at 3 minutes :)
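In ratio terms, using the rounded run times above, fact caching gave roughly a 3x speedup, and Mitogen gave roughly another 4x on top of that:

```latex
\frac{40}{13} \approx 3.1\times \ (\text{fact caching}), \qquad
\frac{13}{3} \approx 4.3\times \ (\text{Mitogen}), \qquad
\frac{40}{3} \approx 13.3\times \ (\text{overall})
```

The overall factor is simply the product of the two steps.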